Overview

In my previous blog post (HERE) I covered integrating Tanzu workload clusters on vSphere with Tanzu (TKGs) with WS1 Access as an external identity provider. I was glad to receive positive reactions to that post, and I also received a couple of requests to cover the same topic for Tanzu Kubernetes Grid (TKGm), since a large number of customers are adopting TKGm as part of their multi-cloud strategy. So in this post I cover identity provider integration for TKGm.

Integration with external identity providers in TKG leverages Project Pinniped to implement OIDC integration with external providers. Pinniped is an authentication service for Kubernetes clusters which allows you to bring your own OIDC, LDAP, or Active Directory identity provider to act as the source of user identities. A user’s identity in the external identity provider becomes their identity in Kubernetes. All other aspects of Kubernetes that are sensitive to identity, such as authorisation policies and audit logging, are then based on the user identities from your identity provider. A major advantage of using an external identity provider to authenticate user access to your Tanzu/Kubernetes clusters is that Kubeconfig files will not contain any specific user identity or credentials, so they can be safely shared.

You can enable identity management during or after management cluster deployment, by configuring an LDAPS or OIDC identity provider. Any workload clusters that you create after enabling identity management are automatically configured to use the same identity provider as the management cluster.

Authentication Workflow

The image below shows how users are authenticated to TKG workload clusters by means of an OIDC or LDAP provider. The TKG admin provisions the TKG management cluster and configures an OIDC or LDAP external identity provider on it. For every TKG workload cluster created afterwards, the admin provides the workload cluster kubeconfig file to the end users, who use it to connect to that cluster. Once a user sends an access request to a TKG workload cluster, the workload cluster passes the user information to the Pinniped service running on the TKG management cluster, which authenticates against the IdP (WS1 Access in my setup). If the user is found in the directory services, the IdP generates an access token which the user then uses to access the workload cluster.

Image source: https://docs.vmware.com/en/VMware-Tanzu-Kubernetes-Grid/1.6/vmware-tanzu-kubernetes-grid-16/GUID-iam-about-iam.html

Lab Inventory

For software versions I used the following:

- VMware ESXi 7.0U3g

- vCenter server version 7.0U3

- VMware WorkspaceONE Access 22.09.10

- Tanzu Kubernetes Grid 2.1.0

- WorkspaceONE Windows Connector

- VMware NSX ALB (Avi) version 22.1.2

- TrueNAS 12.0-U7 used to provision NFS data stores to ESXi hosts.

- VyOS 1.3 used as lab backbone router, Gateway, DNS and NTP

- Windows 2019 Server as DNS and AD.

- Windows 10 Pro as management host for UI access.

- Ubuntu 20.04 as Linux jumpbox for running tanzu cli and kubectl cli.

For virtual hosts and appliances sizing I used the following specs:

- 3 x ESXi hosts each with 12 vCPUs, 2 x NICs and 128 GB RAM.

- vCenter server appliance with 2 vCPU and 24 GB RAM.

Deployment Workflow

- Download TKG template image and upload to vCenter.

- Install Tanzu CLI on your Bootstrap machine.

- Deploy WorkspaceONE Access and WorkspaceONE Access connector and ensure that your AD users and groups are synchronised with WS1 Access successfully.

- Create a Web App pointing to pinniped service running on TKG management cluster as OIDC client.

- Deploy TKG management cluster and add WS1 Access as an external OIDC provider.

- Setup pinniped secret on management cluster.

- Create a workload TKG cluster and define user roles to be assigned to OIDC user.

- Login to workload TKG cluster with OIDC user and verify permissions.

Download TKG Template Image and Import it to vCenter

Step 1: Login to your VMware Customer Connect

To download the TKG template image, navigate to your VMware Customer Connect portal and download a compatible TKG image. For my setup I downloaded the Photon v3 Kubernetes v1.24.9 OVA from this LINK.

Step 2: Import TKG template image as a VM template in vCenter

After downloading the TKG template, log in to your vCenter server, choose the location where you want to deploy the OVA, right-click it and choose Deploy OVF Template.

This will launch the Deploy OVF Template Wizard, choose local file and upload the TKG template OVA you have downloaded from VMware Customer Connect and click NEXT

Give a name to the VM to be deployed from the template and click NEXT

In my setup I make use of resource pools to limit resource consumption, so I created a specific location for my Template deployment

Review the details to make sure that you are working with the correct template and then click NEXT

Accept the EULA and then click NEXT

Next step is to choose a data store on which your template will be stored

In step 7 you choose the network to which the template NIC will be attached. This setting is not used, since during management cluster creation you specify the network (DVS portgroup) to which your Tanzu nodes will connect.

Last step is to review the configuration parameters and then click FINISH

Once the VM is deployed, right click on it and choose Template > Convert to Template

Click on YES to convert the deployed VM to a template which will be used to deploy TKG management and workload clusters. Your TKG template should be visible under VM Templates

Download and Install Kubectl and Tanzu CLI on Bootstrap machine

The next step is to download and initialise Tanzu CLI along with kubectl. This needs to be done on your Bootstrap machine, which in my setup is an Ubuntu VM from which I will create the TKG management cluster and later create and manage workload clusters.

You can download kubectl and Tanzu cli from VMware Customer Connect and follow the steps in VMware documentation (based on your OS) to initialise tanzu cli packages and prepare tanzu binaries.

Create an OpenID Connect Web App for TKG in WorkspaceONE Access

As described in the overview, the TKG management cluster uses Project Pinniped to implement OIDC integration (based on OAuth 2.0) with external providers, so a user’s identity in the external identity provider becomes their identity in Kubernetes. In WorkspaceONE Access we need to create an OIDC client for our TKG management cluster. To do this, log in to the WS1 Access admin console, navigate to Resources > Web Apps and click NEW

Specify a Name to the new Web App and click NEXT

The authentication type must be OpenID Connect (OIDC). The Target URL and Redirect URL are set to the same value, which for TKG with NSX ALB is https://<Avi assigned IP>/callback. The client ID is an identifier for your TKG Pinniped service, and the same value must be supplied later when deploying the management cluster. The client secret can be a randomly generated string, created with the command below on any Linux machine

openssl rand -hex 32

Note: the Target and Redirect URL can be set to any IP from the Avi VIP pool range and be corrected later when the management cluster is up and running by inspecting the pinniped loadbalancer service IP (discussed later in this post).
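As a minimal sketch of the secret generation step, the snippet below generates the client secret, checks that it has the expected shape, and stores it in a file readable only by the current user. The file name pinniped-client-secret.txt is an assumption for illustration and is not something WS1 Access or TKG requires.

```shell
#!/usr/bin/env sh
# Generate 32 random bytes, rendered as 64 hexadecimal characters.
CLIENT_SECRET="$(openssl rand -hex 32)"

# Sanity check: the secret should be exactly 64 lowercase hex characters.
if printf '%s' "$CLIENT_SECRET" | grep -Eq '^[0-9a-f]{64}$'; then
  # Hypothetical file name; umask 077 keeps the file private to this user.
  (umask 077 && printf '%s\n' "$CLIENT_SECRET" > pinniped-client-secret.txt)
  echo "client secret written to pinniped-client-secret.txt"
else
  echo "unexpected openssl output, no file written" >&2
fi
```

The same string is then pasted into the WS1 Access web app and, later, into the OIDC step of the TKG installer.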

Make sure that both “Open in Workspace ONE Web” and “Show in User Portal” are unchecked, click NEXT and accept the default access policy

Click NEXT and then SAVE & Assign to assign the newly created OIDC client to directory users and groups

In my setup I assigned the newly created OIDC web app to an AD user called nsxbaas which is member of an AD group called Developers.

The last step in the WS1 Access console is to adjust the remote access settings of our newly created OIDC web app. From the WS1 Access UI navigate to Settings > Remote App Access and click on the newly created web app pinniped-tkg

This will open the OAuth 2 Client properties page, to the right side of SCOPE click on EDIT

Change the scope as shown below. The scope sets what an OAuth client is permitted to access, so limiting it to User and Group means that our TKG clusters will be able to read the user, email and group information of the user authenticating against WS1 Access as IdP. Click SAVE

Click on EDIT next to the CLIENT CONFIGURATION section and make sure that “Prompt users for access” is unchecked. You can also set the TTL of the access token that will be assigned to authenticated users.

At this stage our WS1 Access configuration is done and next we will deploy our TKG management cluster.

Deploy TKG management cluster and add WS1 Access as an external OIDC provider

In this section I deploy the TKG management cluster using the installer UI, with NSX ALB (Avi) as the load balancing provider. I will not go through Avi preparation in this post; if you would like to review or learn more about how to prepare Avi as a load balancer provider for TKG, you can check one of my previous posts HERE.

Launch TKG installer UI and start installation wizard

From your Bootstrap machine use the following command to start Tanzu UI installer

tanzu mc create -u -b <listening interface ip>:8080 --browser none

From my Windows jumpbox I then navigated to http://<listening interface ip>:8080, where the TKG Installer UI is available. Click DEPLOY under VMware vSphere

Fill in your vCenter address and credentials. Once you click CONNECT, the installer offers the option to enable workload management (vSphere with Tanzu). In this post we are deploying TKG with a management cluster, so choose the option “Deploy TKG Management Cluster”

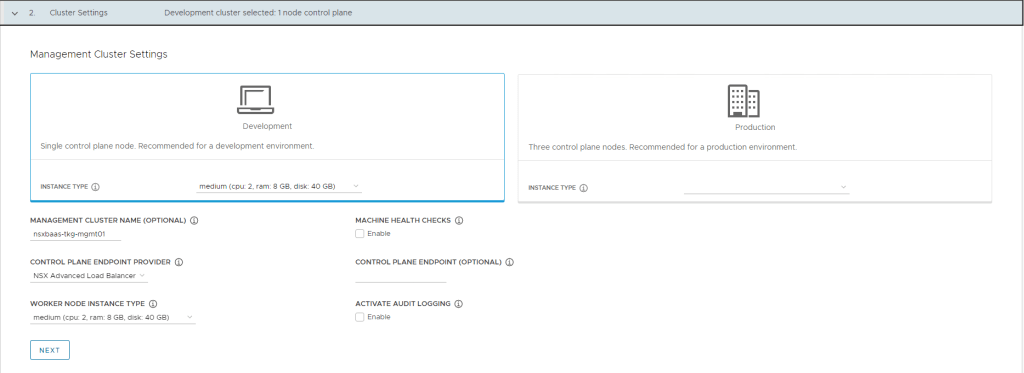

In step 2 of the TKG installer you need to choose the T-shirt sizes of your control and worker nodes, also make sure that you choose NSX Advanced Load Balancer (Avi) as endpoint provider

In step 3 of the deployment workflow you need to add NSX ALB (Avi) parameters, for more details on how to generate an Avi controller self-signed certificate and how to setup Avi you can reference one of my previous blog posts HERE.

Leave Metadata fields empty unless you want to add extra labels/metadata information to your cluster.

In step 5, you need to specify the compute resources in which your TKG clusters will be deployed

In step 6 you need to define where your TKG cluster nodes will be connected to, this is a portgroup in my DVS which is DHCP enabled. In the same step you need to define Pods and Services CIDRs. These are basically subnets from which Antrea (default CNI) will be assigning addresses to pods and cluster services.

In step 7 fill in the OIDC (WS1 Access) parameters which we defined in the previous section. Pay attention to the ISSUER URL, which differs per OIDC provider; for WS1 Access it must include the /SAAS/auth suffix.

Step 8 is where you choose which TKG template to use, this is the template we created earlier in this blog post.

Next, in step 9, you choose whether or not to participate in the VMware CEIP program.

After you click NEXT, review the deployment parameters and, if all is good, start the deployment. If this is your first ever TKG management cluster deployment, the process might take up to 90 minutes, since the bootstrap cluster image needs to be downloaded, the bootstrap cluster needs to be created, Avi service engines need to be deployed and configured as part of the Avi workflow, and so on, so be patient. If all goes according to plan, you should see the deployment workflow succeed as below, and you should be able to verify that your TKG management cluster is in running state by using the command “tanzu mc get” from your bootstrap machine.

Setup pinniped secret on management cluster

Once your TKG management cluster is deployed, login to it using the command “kubectl config use-context nsxbaas-tkg-mgmt01-admin@nsxbaas-tkg-mgmt01” and then inspect the pinniped pods and jobs running:

At this point the Pinniped post-deployment jobs are failing. This is expected, since we have not yet added the TLS certificate information of our WS1 Access node to the Pinniped configuration, so the jobs cannot complete the SSL handshake with WS1 Access. Before fixing this, we need to confirm which VIP address Avi has assigned to the Pinniped service. Run the following command

kubectl get all -n pinniped-supervisor

Note the EXTERNAL-IP, which is the load balancer address assigned by Avi to the Pinniped service, then modify the Redirect and Target URLs of the OIDC client under Web Apps in the WS1 Access console to use this IP address.
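The lookup and the URL format can be sketched in a few lines of shell. This assumes the LoadBalancer service is named pinniped-supervisor (check the output of the command above in your own environment) and falls back to a placeholder address from the documentation range when no cluster is reachable; the function name callback_url is purely illustrative.

```shell
#!/usr/bin/env sh
# Build the Redirect/Target URL that WS1 Access expects for a given VIP.
callback_url() {
  printf 'https://%s/callback\n' "$1"
}

# Assumption: the LoadBalancer service is called "pinniped-supervisor".
VIP=""
if command -v kubectl >/dev/null 2>&1; then
  VIP="$(kubectl get svc pinniped-supervisor -n pinniped-supervisor \
    --request-timeout=5s \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null || true)"
fi

# Placeholder address (192.0.2.0/24 is reserved for documentation)
# used only when the lookup returned nothing.
VIP="${VIP:-192.0.2.10}"
callback_url "$VIP"
```

The printed URL is the value to enter in both the Target URL and Redirect URL fields of the web app.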

Next we need to retrieve the WS1 Access self-signed root certificate and encode it in base64, since we will add it as a secret for the Pinniped service. To obtain the WS1 Access root certificate, log in to the WS1 Access virtual appliance management UI (https://<ws1 access fqdn>:8443), navigate to Install SSL Certificates, and under Server Certificate choose “Auto Generate Certificate (self-signed)”. You should then see the location from which you can download the root CA

Click on it to download the certificate, then open the file, copy its contents to a file on a Linux machine and run the following command to generate a base64-encoded cert file

cat <root-ca-file> | base64 -w0 > ws1_access_base64_rootca.crt

The base64 encoded cert will be stored in the file called ws1_access_base64_rootca.crt, you will need to copy the contents of this file as we will need to paste it later in the pinniped secret.
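Since a truncated or wrapped value is an easy mistake to make here, the encoding step can be wrapped in a small check. The sketch below is only an illustration: encode_ca is a hypothetical helper, and the throwaway self-signed certificate it generates merely stands in for the root CA downloaded from WS1 Access so that the snippet runs on its own.

```shell
#!/usr/bin/env sh
# Encode a PEM certificate as single-line base64 and verify the round trip.
encode_ca() {
  in_file="$1"
  out_file="$2"
  # Refuse input that does not parse as an X.509 certificate.
  openssl x509 -in "$in_file" -noout || return 1
  # -w0 disables line wrapping (GNU coreutils base64).
  base64 -w0 "$in_file" > "$out_file" || return 1
  # Decoding must give back the original file byte for byte.
  base64 -d "$out_file" | cmp -s - "$in_file"
}

# Stand-in for the downloaded WS1 Access root CA: a throwaway certificate.
openssl req -x509 -newkey rsa:2048 -nodes -keyout demo-root-ca.key \
  -out demo-root-ca.pem -days 1 -subj "/CN=demo-root-ca" 2>/dev/null

encode_ca demo-root-ca.pem ws1_access_base64_rootca.crt && echo "encoded OK"
```

With the real certificate, the first argument would be the file you saved from the WS1 Access appliance instead of demo-root-ca.pem.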

Now, to create the Pinniped secret, perform the following steps on your bootstrap machine:

- Get the admin context of the management cluster

- Set the running context to admin context of TKG management cluster and set the following environment variables

export IDENTITY_MANAGEMENT_TYPE=oidc
export _TKG_CLUSTER_FORCE_ROLE="management"
export FILTER_BY_ADDON_TYPE="authentication/pinniped"

Once the above is in place we need to generate the Pinniped secret manifest using the following command:

tanzu cluster create CLUSTER-NAME --dry-run -f CLUSTER-CONFIG-FILE > CLUSTER-NAME-example-secret.yaml

Where:

- CLUSTER-NAME is the name of your target management cluster.

- CLUSTER-CONFIG-FILE is the configuration file that you created above.
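Because the dry run silently produces a different manifest when one of the variables is missing, a quick pre-flight check can help. This is a sketch only; pinniped_env_ok is a hypothetical helper name, and the snippet does not invoke the tanzu CLI itself.

```shell
#!/usr/bin/env sh
# Return 0 only if the three variables hold the values used in this post.
pinniped_env_ok() {
  [ "${IDENTITY_MANAGEMENT_TYPE:-}" = "oidc" ] &&
  [ "${_TKG_CLUSTER_FORCE_ROLE:-}" = "management" ] &&
  [ "${FILTER_BY_ADDON_TYPE:-}" = "authentication/pinniped" ]
}

export IDENTITY_MANAGEMENT_TYPE=oidc
export _TKG_CLUSTER_FORCE_ROLE="management"
export FILTER_BY_ADDON_TYPE="authentication/pinniped"

if pinniped_env_ok; then
  echo "environment ready for the tanzu cluster create --dry-run command"
else
  echo "one or more variables are missing or have a different value" >&2
fi
```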

The environment variable settings cause tanzu cluster create --dry-run to generate a Kubernetes secret, not a full cluster manifest. In my setup, the generated secret looks like this:

Make sure that the secret is applied in the tkg-system namespace.

Important note: the highlighted entry is where you need to paste the base64-encoded WS1 Access root CA which we generated a couple of steps earlier. Then save the file and apply it to your TKG management cluster using the command:

kubectl apply -f CLUSTER-NAME-example-secret.yaml

If the connection from pinniped pods to WS1 Access is successful then you should see the pinniped pods in running state:

Create Workload Cluster and define user roles for OIDC User

In this step, I deploy a TKG workload cluster in order to authenticate to it as a directory user (nsxbaas@nsxbaas.homelab), and I assign the cluster viewer role to this user. This means that with this user I will only be able to list and get information about running pods, deployments, etc. in this workload cluster.

I used the following YAML file to deploy my workload cluster:

    #! ---------------------------------------------------------------------
    #! Basic cluster creation configuration
    #! ---------------------------------------------------------------------
    CLUSTER_NAME: tkg-wld01
    CLUSTER_PLAN: dev
    NAMESPACE: default
    CNI: antrea
    IDENTITY_MANAGEMENT_TYPE: oidc
    INFRASTRUCTURE_PROVIDER: vsphere
    #! ---------------------------------------------------------------------
    #! Node configuration
    #! ---------------------------------------------------------------------
    # SIZE:
    # CONTROLPLANE_SIZE:
    # WORKER_SIZE:
    VSPHERE_NUM_CPUS: 2
    VSPHERE_DISK_GIB: 40
    VSPHERE_MEM_MIB: 4096
    VSPHERE_CONTROL_PLANE_NUM_CPUS: 2
    VSPHERE_CONTROL_PLANE_DISK_GIB: 40
    VSPHERE_CONTROL_PLANE_MEM_MIB: 8192
    VSPHERE_WORKER_NUM_CPUS: 2
    VSPHERE_WORKER_DISK_GIB: 40
    VSPHERE_WORKER_MEM_MIB: 4096
    CONTROL_PLANE_MACHINE_COUNT: 1
    WORKER_MACHINE_COUNT: 1
    #! ---------------------------------------------------------------------
    #! vSphere configuration
    #! ---------------------------------------------------------------------
    VSPHERE_NETWORK: /Homelab/network/TKG-MGMT-WLD-NET
    # VSPHERE_TEMPLATE:
    VSPHERE_SSH_AUTHORIZED_KEY: none
    VSPHERE_USERNAME: administrator@vsphere.local
    VSPHERE_PASSWORD: VMware1!
    VSPHERE_SERVER: vc-l-01a.nsxbaas.homelab
    VSPHERE_DATACENTER: /Homelab
    VSPHERE_RESOURCE_POOL: /Homelab/host/Kitkat/Resources/TKG
    VSPHERE_DATASTORE: /Homelab/datastore/DS01
    # VSPHERE_STORAGE_POLICY_ID
    VSPHERE_FOLDER: /Homelab/vm/TKG
    VSPHERE_TLS_THUMBPRINT: ""
    VSPHERE_INSECURE: true
    # VSPHERE_CONTROL_PLANE_ENDPOINT: # Required for Kube-Vip
    # VSPHERE_CONTROL_PLANE_ENDPOINT_PORT: 6443
    AVI_CONTROL_PLANE_HA_PROVIDER: true
    #! ---------------------------------------------------------------------
    #! Machine Health Check configuration
    #! ---------------------------------------------------------------------
    ENABLE_MHC:
    ENABLE_MHC_CONTROL_PLANE: true
    ENABLE_MHC_WORKER_NODE: true
    MHC_UNKNOWN_STATUS_TIMEOUT: 5m
    MHC_FALSE_STATUS_TIMEOUT: 12m
    ENABLE_AUDIT_LOGGING: true
    ENABLE_DEFAULT_STORAGE_CLASS: true
    CLUSTER_CIDR: 100.86.0.0/16
    SERVICE_CIDR: 100.66.0.0/16
    OS_ARCH: amd64
    OS_NAME: photon
    OS_VERSION: "3"
    ENABLE_AUTOSCALER: false
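For a workload cluster that should authenticate through Pinniped, the key line in this file is IDENTITY_MANAGEMENT_TYPE: oidc. A rough way to confirm it before deploying is sketched below. The helper config_value and the file name tkg-wld01-excerpt.yaml are assumptions for illustration, and the excerpt is a trimmed copy of the configuration so that the snippet runs standalone.

```shell
#!/usr/bin/env sh
# Print the value of a top-level "KEY: value" entry from a flat config file.
# Commented-out entries (lines starting with "#") are ignored.
config_value() {
  sed -n "s/^$1:[[:space:]]*//p" "$2" | head -n 1
}

# Trimmed excerpt of the workload cluster configuration from this post.
cat > tkg-wld01-excerpt.yaml <<'EOF'
CLUSTER_NAME: tkg-wld01
CLUSTER_PLAN: dev
# SIZE:
IDENTITY_MANAGEMENT_TYPE: oidc
AVI_CONTROL_PLANE_HA_PROVIDER: true
EOF

if [ "$(config_value IDENTITY_MANAGEMENT_TYPE tkg-wld01-excerpt.yaml)" = "oidc" ]; then
  echo "cluster $(config_value CLUSTER_NAME tkg-wld01-excerpt.yaml) is configured for OIDC"
else
  echo "IDENTITY_MANAGEMENT_TYPE is not set to oidc" >&2
fi
```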

Just before you deploy the workload cluster, make sure to unset the following environment variables:

unset _TKG_CLUSTER_FORCE_ROLE
unset FILTER_BY_ADDON_TYPE
unset TANZU_CLI_PINNIPED_AUTH_LOGIN_SKIP_BROWSER

I then created my workload cluster as shown below

Now let's switch to the admin context of the workload cluster and view the available Kubernetes cluster roles

The highlighted “view” at the bottom is a built-in read-only view cluster role and this is what I am going to assign to my user nsxbaas@nsxbaas.homelab using cluster role binding as shown below
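For readers who prefer a manifest over the imperative command, a binding of this kind can be sketched as follows. The binding name nsxbaas-view and the file name are hypothetical, and the subject name assumes that the username presented to Kubernetes matches nsxbaas@nsxbaas.homelab exactly, which depends on the username claim configured for your OIDC provider.

```shell
#!/usr/bin/env sh
# Write a ClusterRoleBinding that grants the built-in "view" ClusterRole
# to a single OIDC user. Names marked hypothetical are illustrative only.
cat > nsxbaas-view-crb.yaml <<'EOF'
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: nsxbaas-view            # hypothetical binding name
subjects:
  - kind: User
    name: nsxbaas@nsxbaas.homelab
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: view                    # built-in read-only role
  apiGroup: rbac.authorization.k8s.io
EOF
echo "wrote nsxbaas-view-crb.yaml"
```

From the admin context of the workload cluster, the file would then be applied with kubectl apply -f nsxbaas-view-crb.yaml.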

At this point we are ready to authenticate to our workload cluster as the user nsxbaas@nsxbaas.homelab, using a kubeconfig file for that cluster.

Login to Workload Cluster with OIDC user and Verify Permissions

To test OIDC authentication and user permissions, I first use the command “tanzu cluster kubeconfig get tkg-wld01 --export-file tkg-wld01-kubeconfig”. This generates a kubeconfig file for our workload cluster, which we can share with the user who is allowed view permissions only.
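Before handing a kubeconfig file to someone else, it can be worth confirming that it carries no embedded credentials, which is the property that makes the OIDC kubeconfig safe to share. The check below is a simple sketch: kubeconfig_is_shareable is a hypothetical helper, and the two demo files are hand-written stand-ins rather than real tanzu output.

```shell
#!/usr/bin/env sh
# Return 0 if the file has no embedded client certificate, key,
# token or password; return 2 if the file cannot be read.
kubeconfig_is_shareable() {
  [ -r "$1" ] || return 2
  ! grep -Eq '^[[:space:]]*(client-certificate-data|client-key-data|token|password):' "$1"
}

# Stand-in for an OIDC kubeconfig: the user entry only calls a login plugin.
cat > demo-oidc-kubeconfig.yaml <<'EOF'
users:
- name: demo-oidc-user
  user:
    exec:
      apiVersion: client.authentication.k8s.io/v1beta1
      command: tanzu
EOF

# Stand-in for an admin kubeconfig: the user entry embeds key material.
cat > demo-admin-kubeconfig.yaml <<'EOF'
users:
- name: demo-admin
  user:
    client-certificate-data: PLACEHOLDER
    client-key-data: PLACEHOLDER
EOF

for f in demo-oidc-kubeconfig.yaml demo-admin-kubeconfig.yaml; do
  if kubeconfig_is_shareable "$f"; then
    echo "$f: no embedded credentials found"
  else
    echo "$f: contains embedded credentials, do not share"
  fi
done
```

Against the real file, the call would be kubeconfig_is_shareable tkg-wld01-kubeconfig.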

To test our scenario, I run the command “kubectl get pods -A --kubeconfig tkg-wld01-kubeconfig”. We should see a response with a URL which we need to copy and paste into a web browser

Copy and paste the URL into a browser and you should receive a login code:

Paste the code and you should see the output of kubectl get pods -A

Hope you have found this post useful.